Welcome to Opera of the Future

Group Members and Projects


The SensiTurn is a MediMusical Instrument created as part of the Mass General Hospital (MGH) Vascular Neurology study. The portable, plug-and-play device produces rich, sonorous music in response to the patient's rotary motion, using biofeedback to support motor recovery and diadochokinesis. Equipped with more than 30 sensors, the instrument precisely captures hand and finger placement and relays this data to clinicians and researchers.

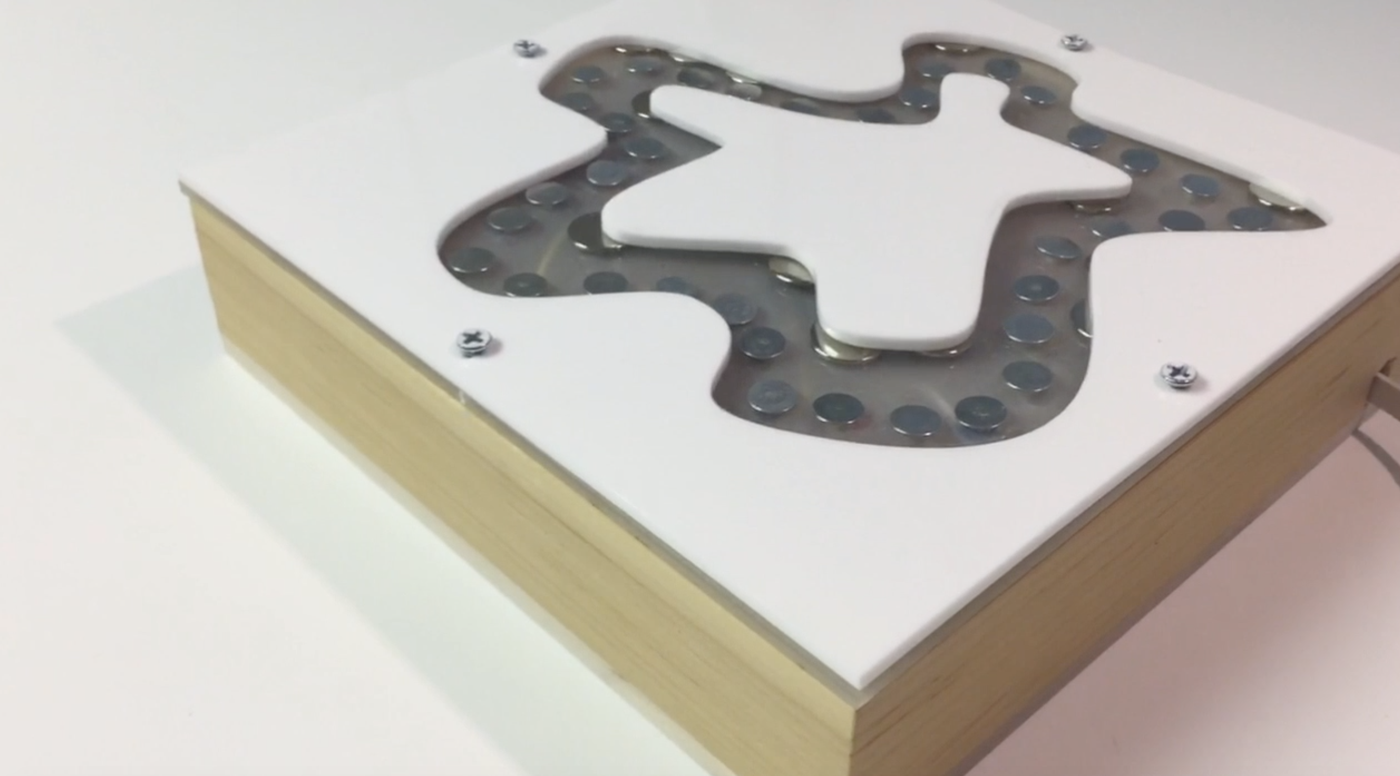

The LabyrSense is a rehabilitative labyrinth with sensory feedback. Building on early research with MGH, the LabyrSense reinvents a traditional medical intervention to increase its efficacy.

The MOON Instrument features a capacitive interface that delivers multisensory gamma stimulation through audio, visual, haptic, and tactile feedback. Developed in collaboration with the Aging Brain Alzheimer’s Initiative at MIT, the Gamma MOON pairs a novel form factor for frequency-based treatment with a soothing musical experience.

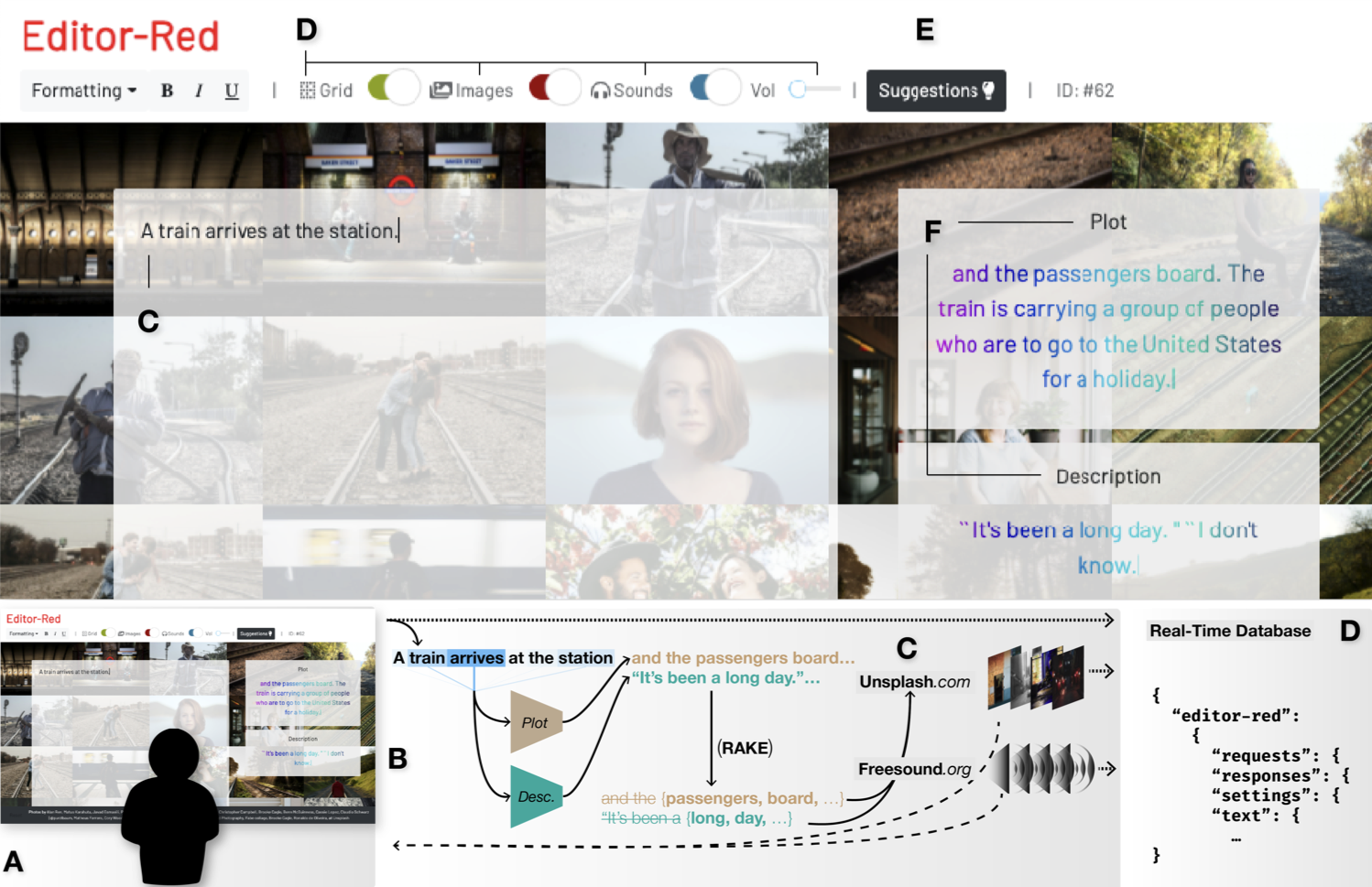

We study how writers engage with AI suggestions when writing stories. Our system provides text, image, and audio suggestions, and our results show the different ways in which writers integrate these suggestions. Work co-led with Guillermo Bernal (Media Lab, Fluid Interfaces) and Daria Savchenko (Harvard Anthropology).

Where to Hide a Stolen Elephant: Leaps in Creative Writing with Multimodal Machine Intelligence accepted to ACM Transactions on Computer-Human Interaction (ToCHI) 2022 (Special Issue on Computational Approaches in HCI).

A suite of tools for working with large, unstructured collections of audio recordings, including methods for curation, visualization, and new musical composition.

The Sound Sketchpad: Expressively Combining Large and Diverse Audio Collections published at ACM Intelligent User Interfaces (IUI) 2021.

Master's Thesis (2021). Living, Singing AI: An evolving, intelligent, scalable, bespoke composition system.

Master's Thesis

Music that pays attention to the listener: an interactive generative music system that evolves in response to the micro- and macro-movements of a listener as they groove.

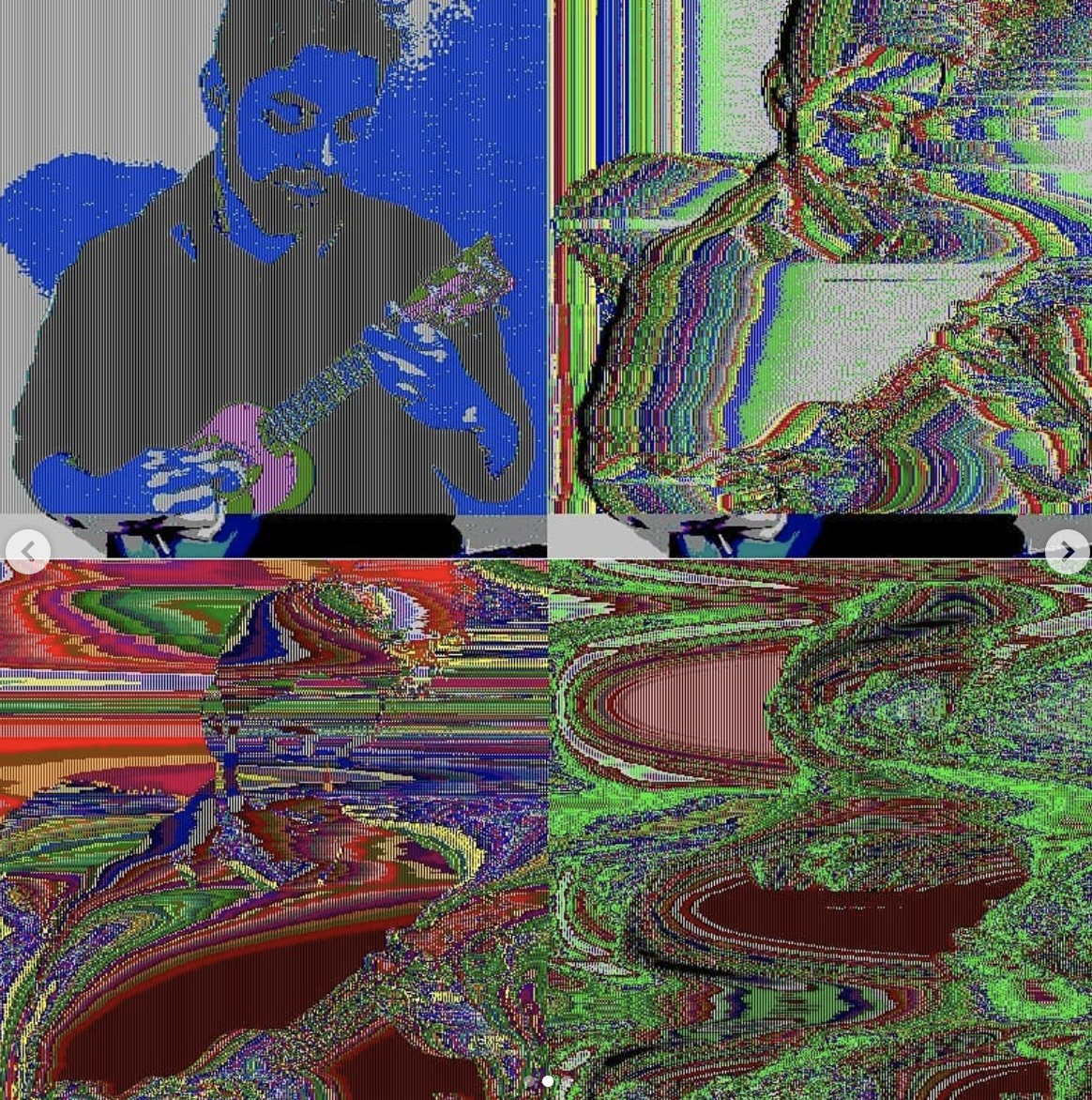

Audio effects (chorus, phaser, reverb) applied to an image to create glitches.

VocalCords explores the design of a new digital music instrument that invites tactile interaction with, and experience of, the singing voice. Initial prototypes use conductive rubber cord stretch sensors that manipulate the audio processing applied to the voice as the cords are pulled, establishing a striking relationship between physical and musical tension. Through this work, I aim to explore the potential of touch-mediated vocal performance, as well as how this added tactile interaction may alter our experience with, and perception of, our singing voices.

The Twelve Mics of Christmas is an interactive installation built around a Christmas tree shrouded in lights and ornaments, with a series of twelve small shotgun microphones placed all around it. The microphones are patched to real-time vocal filters that modify how the voice sounds and what the voice controls.

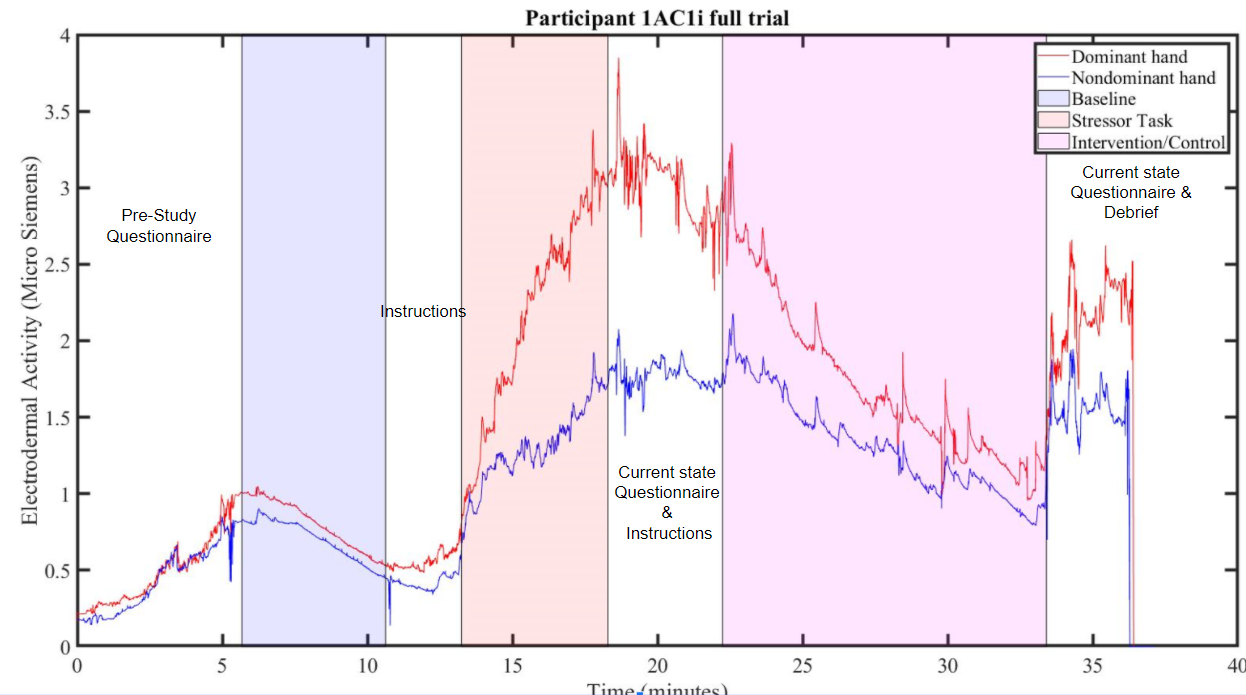

We explored the extent to which a musical/medical interface rooted in both self-reported data and physiological measurement (heart rate and electrodermal activity) can lead to new insights into the study of music as an analgesic tool. During our pilot study, we found that participants' self-reported stress significantly decreased when interacting with our novel interface compared to the control condition.

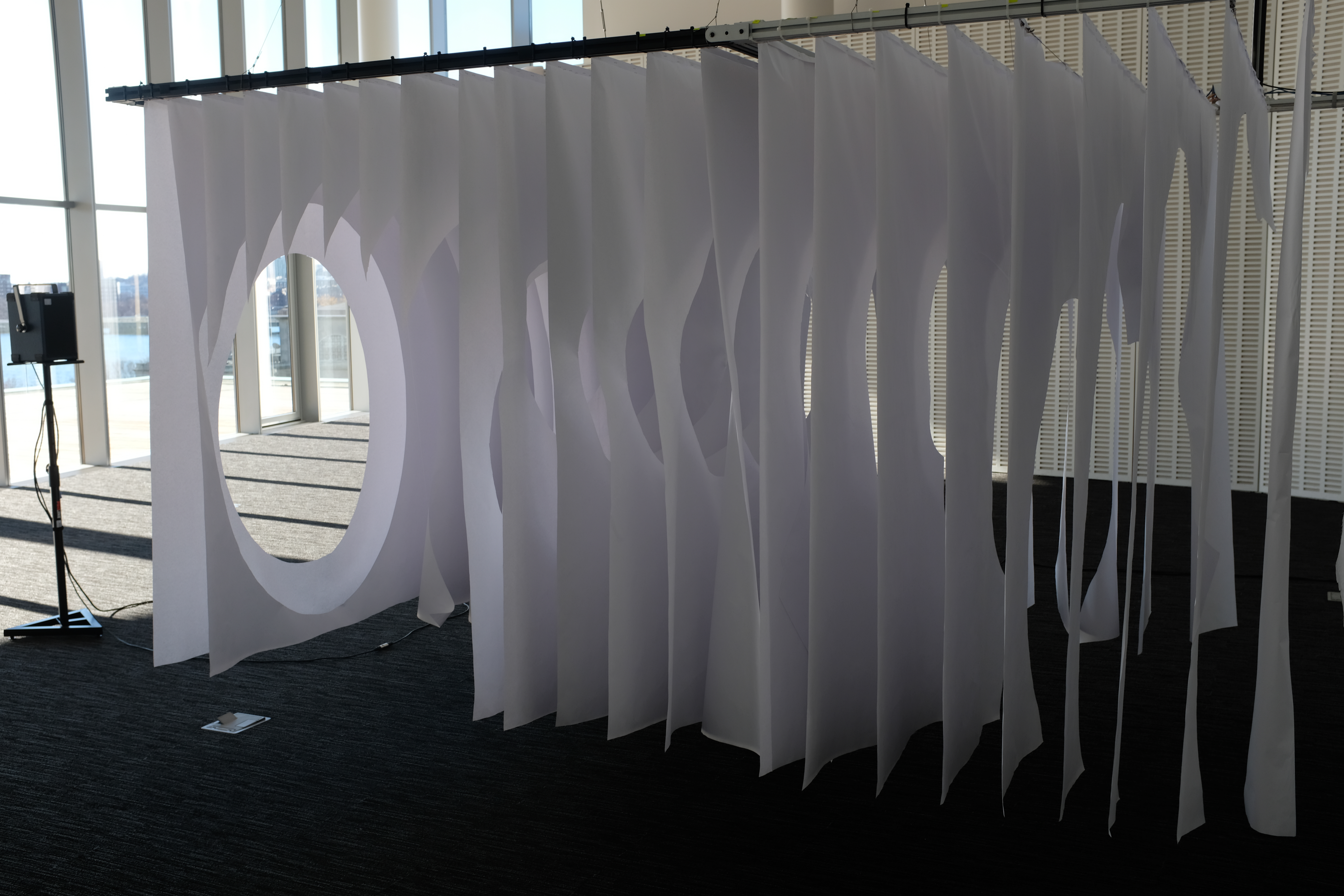

Textile Transmit is an immersive, movement-driven installation that explores the physicality of sound, paper, and fabric. A composition of the sounds of rustling and crumpling paper and fabric plays through a 24’L x 8’W x 8’H interactive environment built from hanging panels of those same materials. Visitors navigate tunnel-like paths carved into these panels, moving through the space from end to end. Although their physical and visual access is purposely limited, sound travels through the installation unhindered. Camera tracking and physical sensors weight the composition between its two poles, fabric and paper: visitors' motion through the installation remixes and redistributes the sound, so that a greater density of visitors at the fabric end produces an influx of paper sound, and vice versa. The objective of this design was to entice those within the space to further explore the environment while rethinking their definition of “instrument”. Visitors also interact physically with the sound around them simply by brushing against interspersed fabric and paper panels outfitted with piezoelectric sensors. In this way, visitors can “play” the installation in harmony with the backing composition; instead of creating a sonic response by interacting with the surface of the instrument, they cause change by moving inside it.

The objective of this research is to recognize and utilize a performer’s movement and breathing patterns as legitimate and necessary data inputs for musical artificial intelligence in a live performance setting. For the first iteration of this research, we are working with professional musicians across all genres and using a wide array of sensors and motion-capture systems to define a musically informed mapping between the performer’s movement and the latent space of a generative audio model. In doing so, we draw from the idea that “to listen to music is to perceive the actions of [human] bodies, and a kind of sympathetic, synchronous bodily action [...] is one primary response” (Iyer 2014). We thereby depart from previous approaches in the field, which foreground audio input, by instead highlighting the role of embodiment in musical improvisation.

Joint work by Manuel Cherep and Jessica Shand.

Membranas is an essay on sonic emergence and practices of blurring. It explores the vibratory (particularly the sonic, through its aural and tactile manifestations) as an intrinsically collective medium that can activate more intimate and intricate ways of relating to each other and to the world we are part of. This resonant investigation proposes a body of work derived from the possibilities within the concept of La Membrana, an organizational apparatus for tuning into our vibrational reality. The installation is meant to be a continuous experiment and test bed for exploring resonance and improvisation as ways of stimulating more-than-human arrangements for collective emergence.


Contact

Please get in touch at a2tod@media.mit.edu.